Hadoop Illuminated: Hardware and Software for Hadoop

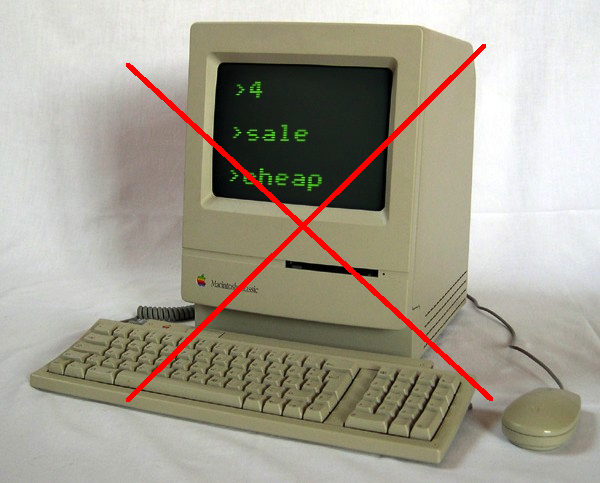

Hadoop runs on commodity hardware. That doesn't mean it runs on cheapo hardware. Hadoop runs on decent server-class machines.

Here are some possible hardware configurations for Hadoop nodes.

Table 14.1. Hardware Specs

| | Medium | High End |
|---|---|---|
| CPU | 8 physical cores | 12 physical cores |
| Memory | 16 GB | 48 GB |
| Disk | 4 disks x 1 TB = 4 TB | 12 disks x 3 TB = 36 TB |
| Network | 1 Gb Ethernet | 10 Gb Ethernet or InfiniBand |

So the high-end machines have more memory. Plus, newer machines are packed with a lot more disk (e.g. 36 TB per node), which gives them high storage capacity.
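To make the disk arithmetic in the table concrete, here is a small sketch that computes raw storage per node and a rough usable capacity for a cluster. The disk counts and sizes come from the table; the 10-node cluster size and the replication factor of 3 (the HDFS default) are assumptions made for illustration, and the names `NodeSpec` and `usable_cluster_tb` are hypothetical. It ignores space reserved for the OS, logs, and temporary data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Disk layout of one Hadoop node, taken from the hardware table."""
    name: str
    disks: int
    disk_tb: float

    @property
    def raw_tb(self) -> float:
        # Raw capacity is simply number of disks times size per disk.
        return self.disks * self.disk_tb


def usable_cluster_tb(spec: NodeSpec, nodes: int, replication: int = 3) -> float:
    """Rough usable capacity: every block is stored `replication` times.

    replication=3 is an assumption (the HDFS default), not a figure from the text.
    """
    return spec.raw_tb * nodes / replication


MEDIUM = NodeSpec("Medium", disks=4, disk_tb=1.0)
HIGH_END = NodeSpec("High End", disks=12, disk_tb=3.0)

if __name__ == "__main__":
    for spec in (MEDIUM, HIGH_END):
        # A 10-node cluster is a hypothetical example size.
        usable = usable_cluster_tb(spec, nodes=10)
        print(f"{spec.name}: {spec.raw_tb:.0f} TB raw per node, "
              f"about {usable:.1f} TB usable across 10 nodes")
```

The point of the sketch is that raw disk and usable storage differ by the replication factor, so a cluster of high-end nodes holds roughly a third of its raw capacity in unique data under these assumptions.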

Examples of Hadoop servers

So what does a large Hadoop cluster look like? Here is a picture of Yahoo's Hadoop cluster.

Hadoop runs well on Linux. The operating systems of choice are:

Red Hat Enterprise Linux (RHEL): This is a well-tested Linux distro that is geared for the enterprise. It comes with Red Hat support.

CentOS: A distro that is source compatible with RHEL. Free. Very popular for running Hadoop. Use a later version (version 6.x).

Ubuntu: The Server edition of Ubuntu is a good fit -- not the Desktop edition. Long Term Support (LTS) releases are recommended, because they continue to be updated for at least 2 years.

Hadoop is written in Java. The recommended Java version is the Oracle JDK 1.6 release, and the recommended minimum update is 31 (v 1.6.0_31).

So what about OpenJDK? At this point the Oracle (formerly Sun) JDK is the 'official' supported JDK. You can still run Hadoop on OpenJDK (it runs reasonably well) but you are on your own for support :-)
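The minimum-version recommendation above can be checked mechanically. The sketch below parses a Java 6 style version string (the `1.6.0_31` form reported by the `java.version` system property) and compares it against the recommended minimum. The helper names `parse_java_version` and `meets_minimum` are hypothetical, and treating any newer release line (for example 1.7) as acceptable is an assumption of this sketch, not a statement from the text.

```python
import re
from typing import Tuple

# Recommended minimum from the text: JDK 1.6, update 31.
MIN_VERSION = (1, 6, 0, 31)

# Matches strings such as "1.6.0_31" or "1.6.0_31-b04"; the update part is optional.
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)(?:_(\d+))?")


def parse_java_version(text: str) -> Tuple[int, int, int, int]:
    """Turn '1.6.0_31' into (1, 6, 0, 31); a missing update counts as 0."""
    match = _VERSION_RE.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized Java version string: {text!r}")
    major, minor, patch, update = match.groups()
    return int(major), int(minor), int(patch), int(update or 0)


def meets_minimum(text: str, minimum: Tuple[int, int, int, int] = MIN_VERSION) -> bool:
    """True if the version is at or above the minimum (tuple comparison)."""
    return parse_java_version(text) >= minimum


if __name__ == "__main__":
    for candidate in ("1.6.0_24", "1.6.0_31", "1.6.0_45"):
        status = "ok" if meets_minimum(candidate) else "too old"
        print(f"{candidate}: {status}")
```

A check like this only looks at the version number. It does not tell you whether the JVM is an Oracle JDK or OpenJDK, which is the support question raised above.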